2024: Field-Based Coordination for Federated Learning


Simulations are publicly available at:

- https://github.com/domm99/experiments-2025-lmcs-field-based-FL

- https://github.com/domm99/experiments-2025-iot-self-federated-learning

Abstract

In the era of pervasive devices and edge intelligence, Federated Learning enables multiple distributed nodes to collaboratively train machine learning models without exchanging raw data, fostering privacy preservation and bandwidth efficiency. However, real-world deployments face significant challenges: data heterogeneity across devices, dynamic network topologies, and the lack of centralized control in truly distributed environments.

These simulations explore field-based and self-organizing coordination paradigms for federated learning. They build on the Aggregate Computing paradigm and are implemented in ScaFi (Scala Fields). Through complementary experiments, we investigate how devices can autonomously organize into federations, aggregate model parameters, and adapt to failures in a fully decentralized manner by leveraging computational fields and spatial interaction patterns.

The following simulations are built on top of the Alchemist simulator.

Simulation Descriptions

Field-Based Federated Learning (FBFL)

This simulation explores the emergence of personalized model zones using computational fields as a distributed coordination mechanism. Devices in spatial proximity exchange model parameters through local diffusion processes, forming hierarchical regions governed by dynamically elected leaders. These leaders act as aggregators for their respective zones, enabling localized learning and self-stabilizing coordination without central infrastructure.
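The neighbour-to-neighbour diffusion of model parameters can be illustrated with gossip averaging. This is a minimal sketch, assuming each device holds a flattened parameter vector and mixes it with its neighbours' vectors using Metropolis-Hastings weights, a standard choice that preserves the network-wide mean. The helper names (`gossip_round`, `diffuse`) are hypothetical. This is a stand-in for the idea, not the FBFL/ScaFi implementation.

```python
from typing import Dict, List

# Hypothetical types for the sketch: node id -> neighbour ids, node id -> parameters.
Graph = Dict[int, List[int]]
Params = Dict[int, List[float]]


def metropolis_weight(graph: Graph, i: int, j: int) -> float:
    """Weight node i assigns to neighbour j; symmetric in i and j."""
    return 1.0 / (1 + max(len(graph[i]), len(graph[j])))


def gossip_round(graph: Graph, params: Params) -> Params:
    """One synchronous round: each node mixes its vector with its neighbours' vectors."""
    new_params: Params = {}
    for i, own in params.items():
        self_weight = 1.0
        mixed = [0.0] * len(own)
        for j in graph[i]:
            w = metropolis_weight(graph, i, j)
            self_weight -= w  # whatever is not given to neighbours stays on the node
            mixed = [m + w * p for m, p in zip(mixed, params[j])]
        new_params[i] = [m + self_weight * p for m, p in zip(mixed, own)]
    return new_params


def diffuse(graph: Graph, params: Params, rounds: int) -> Params:
    """Repeat gossip rounds; on a connected graph all nodes approach the global mean."""
    for _ in range(rounds):
        params = gossip_round(graph, params)
    return params


# Four devices on a line 0 - 1 - 2 - 3, each holding a toy 2-parameter "model".
line: Graph = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
local: Params = {0: [0.0, 4.0], 1: [2.0, 4.0], 2: [4.0, 0.0], 3: [6.0, 0.0]}
result = diffuse(line, local, rounds=200)
# Every node ends up very close to the network-wide mean [3.0, 2.0].
```

In the approach described above, mixing happens within spatial zones under elected leaders. Plain gossip over the whole graph is shown here only because it is the simplest correct instance of local diffusion.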

The implementation leverages field coordination primitives from ScaFi, including constructs for diffusion, convergence, and feedback loops. Nodes autonomously select aggregators within their neighborhoods through distributed spatial leader election, and aggregation occurs at multiple levels based on emergent spatial zones.
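Distributed spatial leader election and zone formation can be sketched with a simplified rule. This sketch assumes every node has a distinct random priority. A node becomes a leader if its priority is the strictly highest within `k` hops, so no two leaders are within `k` hops of each other. Every other node then joins the zone of its nearest leader. The rule, the priorities and the function names are hypothetical illustrations. ScaFi's own leader-election building blocks work differently in detail.

```python
from collections import deque
from typing import Dict, List, Set

Graph = Dict[int, List[int]]  # node id -> neighbour ids


def k_hop_neighbourhood(graph: Graph, start: int, k: int) -> Set[int]:
    """Nodes reachable from `start` in at most k hops, including `start`."""
    seen = {start}
    frontier = deque([(start, 0)])
    while frontier:
        node, dist = frontier.popleft()
        if dist == k:
            continue
        for nbr in graph[node]:
            if nbr not in seen:
                seen.add(nbr)
                frontier.append((nbr, dist + 1))
    return seen


def elect_leaders(graph: Graph, priority: Dict[int, float], k: int) -> Set[int]:
    """A node leads if its (distinct) priority beats every other node within k hops."""
    return {
        n for n in graph
        if all(priority[n] > priority[m] for m in k_hop_neighbourhood(graph, n, k) if m != n)
    }


def assign_zones(graph: Graph, leaders: Set[int]) -> Dict[int, int]:
    """Multi-source BFS: each node joins the zone of its nearest leader (ties by BFS order)."""
    zone = {leader: leader for leader in leaders}
    frontier = deque(sorted(leaders))
    while frontier:
        node = frontier.popleft()
        for nbr in graph[node]:
            if nbr not in zone:
                zone[nbr] = zone[node]
                frontier.append(nbr)
    return zone


# Seven devices on a line 0 - 1 - ... - 6 with hypothetical priorities.
path: Graph = {i: [j for j in (i - 1, i + 1) if 0 <= j < 7] for i in range(7)}
priority = {0: 0.9, 1: 0.1, 2: 0.2, 3: 0.3, 4: 0.4, 5: 0.5, 6: 0.8}
leaders = elect_leaders(path, priority, k=2)  # {0, 6}
zones = assign_zones(path, leaders)           # nodes 0-3 follow leader 0, nodes 4-6 follow leader 6
```

Each node's decision depends only on information within `k` hops. That locality is what lets a field-based version run without any central coordinator. The grain `k` controls how large the resulting zones tend to be.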

Key characteristics:

- Datasets: MNIST, FashionMNIST, Extended MNIST

- Coordination: Fully decentralized using computational fields

- Aggregation: Hierarchical, based on spatial proximity

- Resilience: Self-stabilizing under node failures and topology changes
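Self-stabilization can be illustrated with a hop-count gradient, the kind of field that tells each node how far it is from the nearest leader. This minimal sketch assumes synchronous rounds. In each round, leaders hold 0 and every other node takes the minimum of its neighbours' values plus one. After a leader fails, the same rule, restarted from the stale values, converges again to the distances from the surviving leader. The function names and the line topology are hypothetical.

```python
import math
from typing import Dict, List, Set

Graph = Dict[int, List[int]]  # node id -> neighbour ids


def gradient_round(graph: Graph, sources: Set[int], dist: Dict[int, float]) -> Dict[int, float]:
    """One round: sources hold 0, other nodes take min(neighbour value + 1)."""
    return {
        n: 0.0 if n in sources else min((dist[m] + 1 for m in graph[n]), default=math.inf)
        for n in graph
    }


def stabilise(graph: Graph, sources: Set[int], dist: Dict[int, float],
              max_rounds: int = 100) -> Dict[int, float]:
    """Apply rounds until the field stops changing (or max_rounds is reached)."""
    for _ in range(max_rounds):
        new = gradient_round(graph, sources, dist)
        if new == dist:
            return new
        dist = new
    return dist


# Six devices on a line, with leaders at both ends.
line: Graph = {i: [j for j in (i - 1, i + 1) if 0 <= j < 6] for i in range(6)}
dist = stabilise(line, {0, 5}, {n: math.inf for n in line})  # 0, 1, 2, 2, 1, 0

# Leader 0 crashes: remove it from the graph and keep the survivors' stale values.
alive: Graph = {n: [m for m in nbrs if m != 0] for n, nbrs in line.items() if n != 0}
stale = {n: d for n, d in dist.items() if n in alive}
recovered = stabilise(alive, {5}, stale)  # nodes 1..5 now hold 4, 3, 2, 1, 0
```

Values left over from the failed leader are too low, so they rise by at least one per round until they match the true distance to the remaining leader. A node cut off from every leader would instead count upward forever. Practical gradients usually bound this (for example with a maximum range), which this sketch omits.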

The system achieves accuracy comparable to centralized FedAvg under IID settings, while demonstrating superior robustness to dynamic network conditions. Emergent spatial clusters naturally align with local data distributions, creating personalized learning zones without explicit configuration.
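For reference, the aggregation rule of FedAvg, the centralized baseline mentioned above, is a sample-weighted average of client parameter vectors. The sketch below shows that rule on flattened parameter lists. It also shows a per-zone variant in which each elected leader applies the same rule to the members of its zone. The zone variant and all names (`fedavg`, `zone_fedavg`) are hypothetical illustrations, not the FBFL code.

```python
from typing import Dict, List, Sequence, Tuple

Update = Tuple[int, List[float]]  # (number of local samples, flattened parameters)


def fedavg(updates: Sequence[Update]) -> List[float]:
    """Average parameter vectors, weighting each client by its local sample count."""
    total = sum(n for n, _ in updates)
    if total == 0:
        raise ValueError("at least one client must report a positive sample count")
    dim = len(updates[0][1])
    return [sum(n * params[i] for n, params in updates) / total for i in range(dim)]


def zone_fedavg(zone_of: Dict[int, int], updates: Dict[int, Update]) -> Dict[int, List[float]]:
    """Hypothetical zone-level aggregation: one FedAvg per leader, over its zone members."""
    grouped: Dict[int, List[Update]] = {}
    for node, update in updates.items():
        grouped.setdefault(zone_of[node], []).append(update)
    return {leader: fedavg(members) for leader, members in grouped.items()}


clients = [(100, [1.0, 0.0]), (300, [3.0, 4.0])]
global_model = fedavg(clients)  # [2.5, 3.0]

zone_of = {0: 0, 1: 0, 2: 2, 3: 2}
updates = {0: (100, [1.0, 0.0]), 1: (300, [3.0, 4.0]),
           2: (50, [10.0, 10.0]), 3: (50, [0.0, 0.0])}
zone_models = zone_fedavg(zone_of, updates)  # {0: [2.5, 3.0], 2: [5.0, 5.0]}
```

With a single zone covering every device, `zone_fedavg` reduces to plain `fedavg`. With several zones, each one keeps its own model, which is how per-zone aggregation can yield the personalized models described above.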

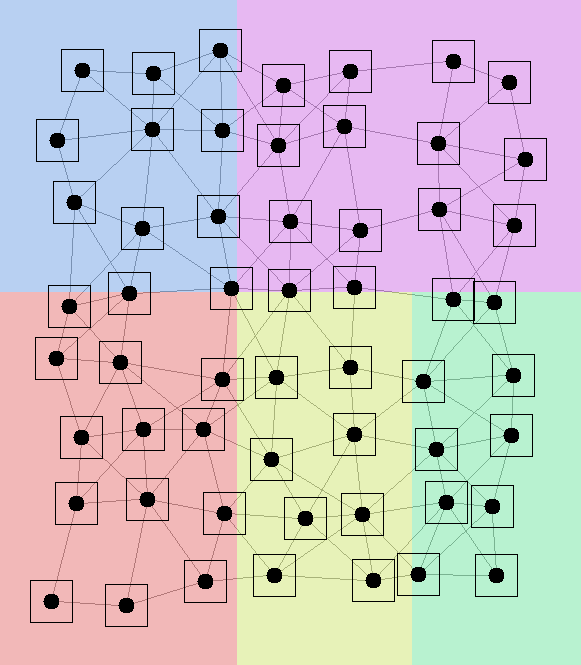

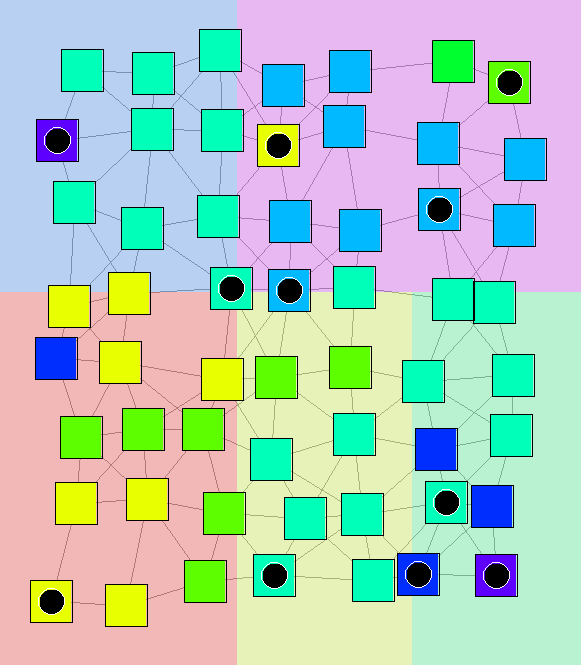

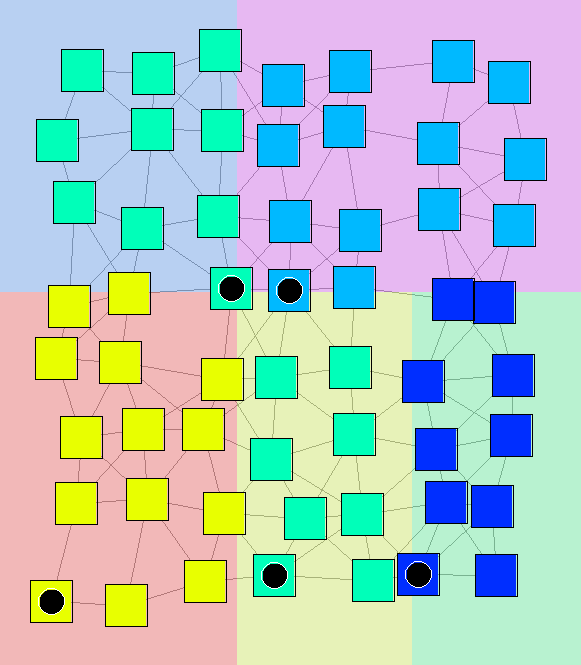

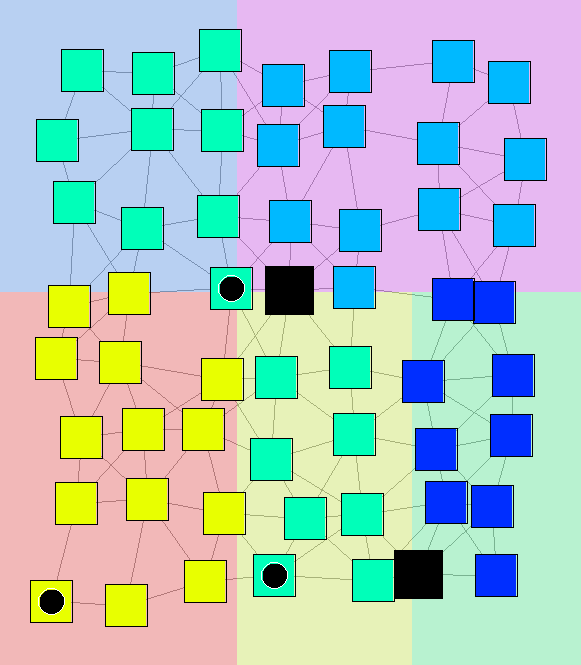

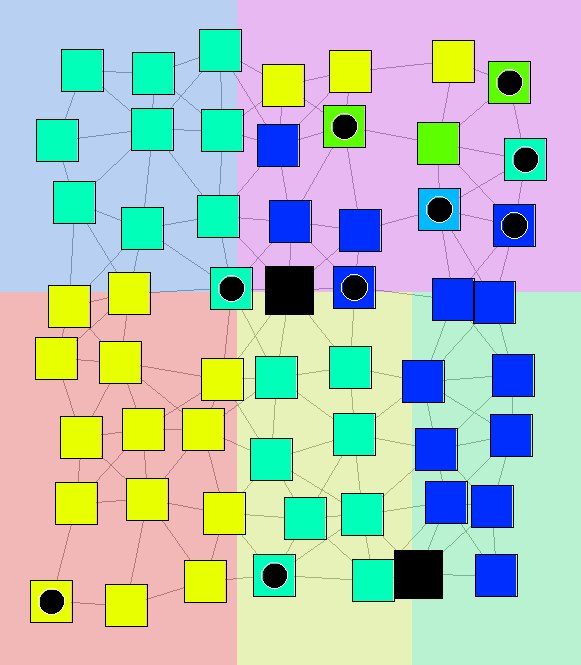

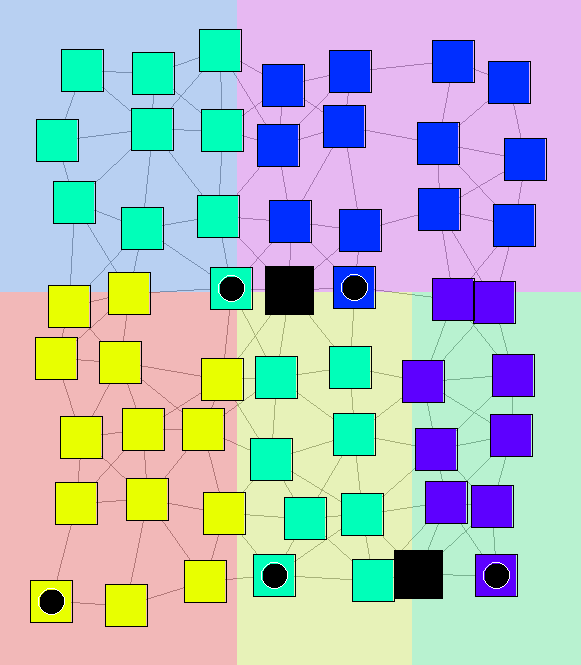

Snapshots

The following images show the evolution of self-organizing federations during a simulation where aggregator failures occur and the system autonomously recovers.

Additional Resources

The work presented here is based on multiple publications:

- Field-Based Coordination for Federated Learning at COORDINATION 2024 (DOI: 10.1007/978-3-031-62697-5_4)

- Proximity-based Self-Federated Learning at ACSOS 2024 (DOI: 10.1109/ACSOS61780.2024.00033)

- FBFL: A Field-Based Coordination Approach for Data Heterogeneity in Federated Learning submitted to Logical Methods in Computer Science (arXiv:2502.08577)

- Decentralized Proximity-Aware Clustering for Collective Self-Federated Learning submitted to the Internet of Things journal